Firecrawl

Home - Firecrawl

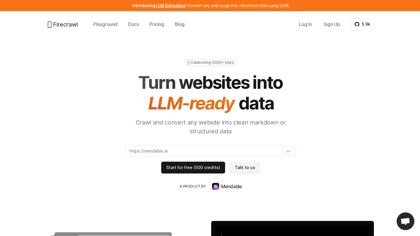

Introduction:

Firecrawl crawls and converts any website into clean markdown.

Firecrawl Product Information

What is Firecrawl?

Crawl, Capture, Clean: We crawl all accessible subpages and give you clean markdown for each. No sitemap required.

Firecrawl's Core Features

Crawl and convert any website into clean markdown or structured data

Crawl all accessible subpages and give you clean markdown for each

Gather data even if a website uses JavaScript to render content

Returns clean, well-formatted markdown

Orchestrates the crawling process in parallel for the fastest results

Caches content, so you don't have to wait for a full scrape unless new content exists
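The caching idea above can be illustrated with a minimal, generic sketch. This is not Firecrawl's implementation. The sketch stores a hash of each page's raw HTML and reruns the expensive markdown conversion only when that hash changes. `PageCache` and its `convert` callback are hypothetical names. This version still needs the fresh HTML to compare against. A production crawler might also use HTTP conditional requests (`ETag` / `If-None-Match`) to skip the download itself.

```python
import hashlib
from typing import Callable, Dict, Tuple


class PageCache:
    """Hypothetical cache that reruns conversion only when a page's raw HTML changes."""

    def __init__(self, convert: Callable[[str], str]) -> None:
        self._convert = convert
        # url -> (SHA-256 of the raw HTML, converted markdown)
        self._entries: Dict[str, Tuple[str, str]] = {}
        self.conversions = 0  # how many times the expensive step actually ran

    def get_markdown(self, url: str, raw_html: str) -> str:
        digest = hashlib.sha256(raw_html.encode("utf-8")).hexdigest()
        cached = self._entries.get(url)
        if cached is not None and cached[0] == digest:
            return cached[1]  # unchanged content: reuse the earlier result
        markdown = self._convert(raw_html)
        self.conversions += 1
        self._entries[url] = (digest, markdown)
        return markdown


if __name__ == "__main__":
    cache = PageCache(convert=str.upper)  # stand-in for a real HTML-to-markdown step
    cache.get_markdown("https://example.com/", "<p>hello</p>")
    cache.get_markdown("https://example.com/", "<p>hello</p>")        # cache hit
    cache.get_markdown("https://example.com/", "<p>hello again</p>")  # content changed
    print(cache.conversions)  # 2
```

Keying the cache on a content hash keeps the example simple. It assumes that identical bytes mean identical output, which holds for a deterministic converter.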

Firecrawl's Use Cases

1. Training machine learning models
2. Market research
3. Content aggregation

Firecrawl's Pricing

Free Plan: 500 credits, $0/month. Scrape 500 pages; 5 /scrape requests per minute; 1 concurrent /crawl job.

Hobby: 3,000 credits, $19/month. Scrape 3,000 pages; 10 /scrape requests per minute; 3 concurrent /crawl jobs*.

Standard (Most Popular): 100,000 credits, $99/month. Scrape 100,000 pages; 50 /scrape requests per minute; 10 concurrent /crawl jobs*.

Growth: 500,000 credits, $399/month. Scrape 500,000 pages; 500 /scrape requests per minute; 50 concurrent /crawl jobs*; priority support.

Enterprise Plan: Unlimited credits and custom RPMs (requests per minute); talk to us. Includes top-priority support, feature acceleration, SLAs, an account manager, custom rate limits and volume, custom concurrency limits, beta feature access, and the CEO's number.
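Every listed plan is priced in credits, and one credit covers one page. That means the effective price per 1,000 pages is plain division. The sketch below uses the figures from the plan list. `Plan`, `cost_per_1000_pages`, and `cheapest_plan_for` are illustrative helpers, not part of any Firecrawl tool. The math ignores taxes, per-minute limits, and unused credits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    monthly_usd: float
    monthly_credits: int  # one credit = one scraped page


# Figures from the plan list (Enterprise is custom-priced, so it is omitted).
PLANS = [
    Plan("Free", 0, 500),
    Plan("Hobby", 19, 3_000),
    Plan("Standard", 99, 100_000),
    Plan("Growth", 399, 500_000),
]


def cost_per_1000_pages(plan: Plan) -> float:
    """Effective price per 1,000 pages if every credit in the month is used."""
    return plan.monthly_usd / plan.monthly_credits * 1_000


def cheapest_plan_for(pages_per_month: int) -> Plan:
    """Cheapest listed plan whose monthly credits cover the expected volume."""
    for plan in PLANS:  # PLANS is ordered from cheapest to most expensive
        if plan.monthly_credits >= pages_per_month:
            return plan
    raise ValueError("volume exceeds the listed plans; Enterprise pricing is custom")


if __name__ == "__main__":
    for plan in PLANS:
        print(f"{plan.name:<8} ${cost_per_1000_pages(plan):.2f} per 1,000 pages")
    print(cheapest_plan_for(40_000).name)  # Standard
```

On these numbers, Hobby works out to about $6.33 per 1,000 pages, Standard to $0.99, and Growth to about $0.80. The higher tiers are mainly a volume discount.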

FAQ from Firecrawl

What is Firecrawl?

- Firecrawl turns entire websites into clean, LLM-ready markdown or structured data. Scrape, crawl and extract the web with a single API. Ideal for AI companies looking to empower their LLM applications with web data.

How can I try Firecrawl?

- You can get started with Firecrawl's free trial, which includes 100 pages. The trial lets you experience firsthand how Firecrawl can streamline your data collection and conversion processes. Sign up and begin transforming web content into LLM-ready data today!

Who can benefit from using Firecrawl?

- Firecrawl is tailored for LLM engineers, data scientists, AI researchers, and developers looking to harness web data for training machine learning models, market research, content aggregation, and more. It simplifies the data preparation process, allowing professionals to focus on insights and model development.

Is Firecrawl open-source?

- Yes. You can check out the repository on GitHub. Keep in mind that the repository is still in the early stages of development, and we are in the process of merging custom modules into this monorepo.

How does Firecrawl handle dynamic content on websites?

- Unlike scrapers that only read a page's static HTML, Firecrawl is equipped to handle dynamic content rendered with JavaScript. It collects data from all accessible subpages, making it a reliable tool for scraping websites that rely heavily on JavaScript to deliver content.

Why is it not crawling all the pages?

- There are a few reasons why Firecrawl may not be able to crawl every page of a website. Common ones include rate limiting and anti-scraping mechanisms that block the crawler from certain pages. If you're experiencing issues with the crawler, please reach out to our support team at [email protected].

Can Firecrawl crawl websites without a sitemap?

- Yes, Firecrawl can access and crawl all accessible subpages of a website, even in the absence of a sitemap. This feature enables users to gather data from a wide array of web sources with minimal setup.
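Crawling without a sitemap generally means discovering pages by following links. The sketch below is generic breadth-first link discovery, not Firecrawl's crawler. It starts from one URL, resolves relative links, drops `#fragments`, and stays on the starting host. `discover_subpages` and its `fetch` callback are hypothetical. To keep the example runnable offline, a dictionary stands in for the network. A real crawler would also consult robots.txt, pace its requests, and render JavaScript.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, List, Optional
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href values of <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def discover_subpages(start_url: str,
                      fetch: Callable[[str], Optional[str]],
                      max_pages: int = 100) -> List[str]:
    """Breadth-first link discovery restricted to the start URL's host; no sitemap needed."""
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    crawled: List[str] = []
    while queue and len(crawled) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # page could not be fetched: skip it
            continue
        crawled.append(url)
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links and drop #fragments so each page is visited once.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return crawled


if __name__ == "__main__":
    # A tiny in-memory "website" standing in for real HTTP requests.
    site = {
        "https://example.com/": '<a href="/docs">Docs</a> <a href="https://other.org/">Elsewhere</a>',
        "https://example.com/docs": '<a href="/docs/api#intro">API</a> <a href="/">Home</a>',
        "https://example.com/docs/api": "<p>No further links.</p>",
    }
    print(discover_subpages("https://example.com/", site.get))
    # ['https://example.com/', 'https://example.com/docs', 'https://example.com/docs/api']
```

The `seen` set is what keeps the crawl finite. Pages that link back to each other (like `/` and `/docs` here) are queued only once.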

What formats can Firecrawl convert web data into?

- Firecrawl specializes in converting web data into clean, well-formatted markdown. This format is particularly suited for LLM applications, offering a structured yet flexible way to represent web content.

How does Firecrawl ensure the cleanliness of the data?

- Firecrawl employs advanced algorithms to clean and structure the scraped data, removing unnecessary elements and formatting the content into readable markdown. This process ensures that the data is ready for use in LLM applications without further preprocessing.
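As a rough illustration of what "removing unnecessary elements and formatting the content into readable markdown" involves, here is a minimal converter built on Python's standard `html.parser`. It is a hypothetical sketch, not Firecrawl's algorithm. It drops `script`, `style`, `nav`, `footer`, and `noscript` content. It maps headings, paragraphs, list items, and links to markdown, and ignores everything else (tables, images, emphasis).

```python
from html.parser import HTMLParser
from typing import List, Optional

SKIP_TAGS = {"script", "style", "nav", "footer", "noscript"}  # treated as boilerplate
HEADINGS = {"h1": 1, "h2": 2, "h3": 3, "h4": 4, "h5": 5, "h6": 6}


class MarkdownConverter(HTMLParser):
    """Very small HTML-to-markdown converter that drops boilerplate elements."""

    def __init__(self) -> None:
        super().__init__()
        self.blocks: List[str] = []   # finished markdown blocks
        self.current: List[str] = []  # text of the block being built
        self.prefix = ""              # "# ", "- ", ... for the current block
        self.skip_depth = 0           # > 0 while inside a skipped element
        self.href: Optional[str] = None
        self.link_text: List[str] = []

    def _flush(self) -> None:
        text = " ".join("".join(self.current).split())  # collapse whitespace
        if text:
            self.blocks.append(self.prefix + text)
        self.current, self.prefix = [], ""

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1
        elif self.skip_depth:
            return
        elif tag in HEADINGS:
            self._flush()
            self.prefix = "#" * HEADINGS[tag] + " "
        elif tag == "li":
            self._flush()
            self.prefix = "- "
        elif tag == "p":
            self._flush()
        elif tag == "a":
            self.href = dict(attrs).get("href")
            self.link_text = []

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif self.skip_depth:
            return
        elif tag == "a" and self.href is not None:
            self.current.append(f"[{''.join(self.link_text).strip()}]({self.href})")
            self.href = None
        elif tag in HEADINGS or tag in ("p", "li"):
            self._flush()

    def handle_data(self, data):
        if self.skip_depth:
            return
        if self.href is not None:
            self.link_text.append(data)
        else:
            self.current.append(data)

    def markdown(self) -> str:
        self._flush()
        return "\n\n".join(self.blocks)


def html_to_markdown(html: str) -> str:
    converter = MarkdownConverter()
    converter.feed(html)
    converter.close()  # process any text the parser is still buffering
    return converter.markdown()


if __name__ == "__main__":
    page = (
        '<nav><a href="/">Home</a> | <a href="/pricing">Pricing</a></nav>'
        '<h1>Example</h1><p>Turns pages into <a href="https://example.com/md">markdown</a>.</p>'
        "<ul><li>Crawl</li><li>Scrape</li></ul><script>console.log('tracking');</script>"
    )
    print(html_to_markdown(page))
```

The navigation links and the script never reach the output. A real cleaner would need far more heuristics (main-content detection, tables, code blocks) than this tag-based skip list.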

Is Firecrawl suitable for large-scale data scraping projects?

- Absolutely. Firecrawl offers various pricing plans, including a Scale plan that supports scraping of millions of pages. With features like caching and scheduled syncs, it's designed to efficiently handle large-scale data scraping and continuous updates, making it ideal for enterprises and large projects.

Does it respect robots.txt?

- Yes, the Firecrawl crawler respects the rules set in a website's robots.txt file. If you notice any issues with the way Firecrawl interacts with your website, you can adjust your robots.txt file to control the crawler's behavior. Firecrawl's user agent name is 'FirecrawlAgent'. If you notice any unexpected behavior, please let us know at [email protected].
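A site owner can preview how a robots.txt file applies to the `FirecrawlAgent` user agent with Python's standard-library `urllib.robotparser`. The rules and paths below are made-up examples. This shows how the standard parser reads the file, not how Firecrawl's crawler evaluates it internally. The explicit `modified()` call is there because `can_fetch()` refuses every URL until a fetch or parse time has been recorded.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt: keep FirecrawlAgent out of two sections, allow everyone else.
ROBOTS_TXT = """\
User-agent: FirecrawlAgent
Disallow: /private/
Disallow: /drafts/

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())
# Record a parse time so can_fetch() does not treat the rules as unread.
parser.modified()

for path in ("/blog/post-1", "/private/report", "/drafts/idea"):
    url = "https://example.com" + path
    print(path, parser.can_fetch("FirecrawlAgent", url))
# /blog/post-1 True
# /private/report False
# /drafts/idea False
```

Because the `FirecrawlAgent` group matches first, the catch-all `User-agent: *` group only governs other crawlers.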

What measures does Firecrawl take to handle web scraping challenges like rate limits and caching?

- Firecrawl is built to navigate common web scraping challenges, including reverse proxies, rate limits, and caching. It smartly manages requests and employs caching techniques to minimize bandwidth usage and avoid triggering anti-scraping mechanisms, ensuring reliable data collection.

Does Firecrawl handle CAPTCHAs or authentication?

- Firecrawl does not currently handle CAPTCHAs or authentication, but support is on the roadmap. If a website is protected by a CAPTCHA or requires authentication, Firecrawl will not be able to access it.

Do API keys expire?

- Firecrawl API keys do not expire unless they are revoked.

Can I use the same API key for scraping, crawling, and extraction?

- Yes, you can use the same API key for scraping, crawling, and extraction.

Is Firecrawl free?

- Firecrawl is free for the first 300 scraped pages (300 free credits). After that, you can upgrade to our Standard or Scale plans for more credits.

Is there a pay-per-use plan instead of a monthly one?

- No, we do not currently offer a pay-per-use plan. Instead, you can upgrade to our Standard or Scale plans for more credits.

How many credits do I get with each plan?

- With the free plan you get 300 free credits per month (300 pages scraped). With the Standard plan you get 50,000 credits per month (50,000 pages scraped) and with the Scale plan you get 2,500,000 credits per month (2,500,000 pages scraped). If you think you are going to need even more credits, please contact us.

How many credits do scraping, crawling, and extraction cost?

- Scraping costs 1 credit per page. Crawling costs 1 credit per page. Extraction costs 1 credit per page.

Do you charge for failed requests (scrape, crawl, extract)?

- We do not charge for any failed requests (scrape, crawl, extract). Please contact support at [email protected] if you have any questions.
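All three operations cost one credit per page, and failed requests are free. That means estimating a batch's cost comes down to counting successful pages. The bookkeeping sketch below illustrates this. `PageResult` and `credits_used` are hypothetical, not part of Firecrawl's API or billing system.

```python
from dataclasses import dataclass
from typing import Iterable

# Per-page credit costs as stated in the FAQ.
CREDITS_PER_PAGE = {"scrape": 1, "crawl": 1, "extract": 1}


@dataclass(frozen=True)
class PageResult:
    """Hypothetical record of one processed page."""
    operation: str  # "scrape", "crawl" or "extract"
    succeeded: bool


def credits_used(results: Iterable[PageResult]) -> int:
    """Total credits for a batch: successful pages are charged, failed ones are free."""
    return sum(CREDITS_PER_PAGE[r.operation] for r in results if r.succeeded)


if __name__ == "__main__":
    batch = (
        [PageResult("crawl", True)] * 115
        + [PageResult("crawl", False)] * 5
        + [PageResult("scrape", True)] * 10
    )
    used = credits_used(batch)
    print(used)          # 125
    print(3_000 - used)  # 2875 credits left on a 3,000-credit plan
```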

What payment methods do you accept?

- We accept payments through Stripe, which accepts credit cards, debit cards, and PayPal.