Oobabooga

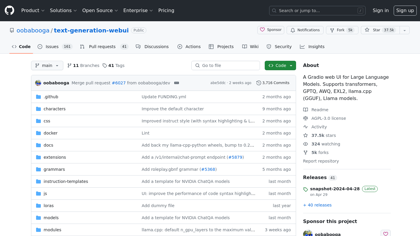

GitHub - oobabooga/text-generation-webui: A Gradio web UI for Large Language Models.

Introduction:

A Gradio web UI for Large Language Models, hosted on GitHub as oobabooga/text-generation-webui. It supports transformers, GPTQ, AWQ, EXL2, llama.cpp (GGUF), and Llama models.

Oobabooga Product Information

What is Oobabooga?

Oobabooga (text-generation-webui) is a Gradio web UI for Large Language Models. It supports transformers, GPTQ, AWQ, EXL2, llama.cpp (GGUF), and Llama models.